The Last Human Generation to Live in a World Where We're the Smartest

- hej0305

- Aug 9, 2025

Updated: Aug 11, 2025

AI Growth Is on Track to Outrun the Universe Itself

What if the greatest force shaping your future wasn’t political, economic, or even human? What if it was intelligence—non-biological, ever-accelerating, and soon beyond our comprehension?

Artificial Intelligence is not just advancing. It’s compounding. By key measures, it doubles every few months. And this isn’t a fluke; it’s the continuation of a 600-million-year trend tracing back to the first spark of neural life. Now it’s entering a phase so explosive that, if current growth rates hold, AI will surpass the total computational capacity of the known universe within 300 years.

That’s not science fiction. It’s math.

We are experiencing an explosion in the development and use of Artificial Intelligence. This technology will change our world much faster and more radically than most people realize. We can predict the growth of computational intelligence because it follows the accelerating trend of biological intelligence, dating back to the first organisms with neural systems some 600 million years ago.

The historical growth of intelligence on Earth has been exponential, and the rate of improvement has increased significantly with the introduction of current LLM technology. We have a difficult time understanding accelerating exponential growth, so we underestimate how quickly the future will change. The growth rate of computational intelligence has recently jumped by an order of magnitude, and the projections for the future are frankly mind-blowing.

This article will give you a better understanding of how exponential growth works, what the growth trend of intelligence looks like, and what its accelerating pace predicts for the future. Finally, we look at how the need for energy and other resources will limit that growth, and which emerging technologies could allow exponential growth to continue for decades to come.

PART I

INTELLIGENCE HAS BEEN GROWING EXPONENTIALLY FOR BILLIONS OF YEARS

We like to think of progress as random or chaotic. It’s not.

The rise of intelligence, from the first spark of life to AI, has followed a startlingly predictable curve. As Ray Kurzweil shows in The Singularity Is Nearer, when you chart breakthrough innovations on a logarithmic timeline, something eerie emerges: they line up.

The timeline of intelligence isn’t a messy scribble—it’s a straight arrow, aimed at the future, moving faster and faster.

The Time Between Innovations Is Shrinking

Life first appeared 4 billion years ago.

It took 2 billion years to evolve complex (eukaryotic) cells.

Jump to modern history: the telephone to the internet took under 100 years.

And AI? It went from niche lab project to world-changing force in just a couple of decades.

What once took eons now happens in years, even months. We are riding a wave that’s growing steeper by the day. Every innovation triggers an increase in total planetary intelligence. At first, that increase showed up as ever more numerous and sophisticated organisms; more recently, it has shown up in the spread of electronic machines.

So Where Are We Headed?

If the past is predictable, what can we expect next? The future isn’t a fog. It’s a roadmap hidden in plain sight. Let’s map out where exponential growth leads, and whether there are limits or forks in the road.

The Brain vs. the Machine: Who’s Winning Right Now?

For now, the smartest thing on Earth is still you. The human brain, sculpted over 600 million years of evolution, is the most powerful engine of intelligence we know. But for how much longer?

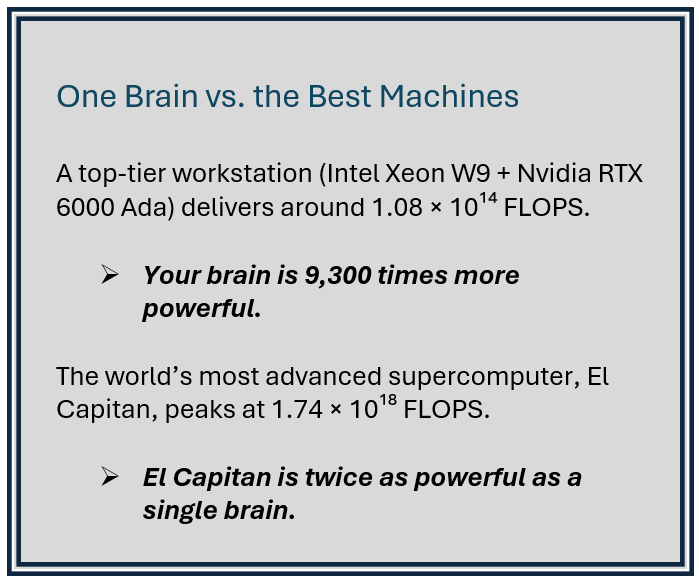

Scientists have been racing to estimate the brain’s raw processing power. Using a standard benchmark for computers called FLOPS (floating-point operations per second), the latest high-end estimates suggest the brain operates at 1 quintillion FLOPS, or 1 × 10¹⁸.

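Let’s put that in perspective. El Capitan, the world’s fastest supercomputer as of 2025, delivers roughly 1.7 × 10¹⁸ FLOPS on the LINPACK benchmark used by the TOP500 list, about the same as one human brain. Impressive? Yes. But here’s the catch: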

Your brain runs on just 20 watts—about the power of a dim lightbulb.

El Capitan gulps down 30 megawatts—1.5 million times more power.

If we tried to match human intelligence with hardware alone, we’d burn the planet down.
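The power comparison is easy to check. This Python sketch uses the article's figures for the brain, El Capitan, and world population; the ~19 TW figure for average global primary energy use is an assumed round number added for illustration, not from the text, and "one El Capitan per person" is a hypothetical scenario.

```python
# Figures quoted in the text above.
BRAIN_WATTS = 20.0          # one human brain
EL_CAPITAN_WATTS = 30e6     # El Capitan, ~30 MW
HUMANS = 8.2e9              # world population, 2025

# Assumed round figure, not from the text: average global primary energy use.
WORLD_ENERGY_WATTS = 19e12  # ~19 TW

# How many times more power the supercomputer draws than one brain.
power_ratio = EL_CAPITAN_WATTS / BRAIN_WATTS

# Hypothetical: one El Capitan-class machine for every person on Earth.
all_humans_in_hardware = HUMANS * EL_CAPITAN_WATTS
times_world_energy = all_humans_in_hardware / WORLD_ENERGY_WATTS

print(f"El Capitan vs. brain: {power_ratio:,.0f}x the power")          # 1,500,000x
print(f"Hardware for 8.2 billion brains: {all_humans_in_hardware:.2e} W")  # 2.46e+17 W
print(f"That is about {times_world_energy:,.0f}x all human energy use")
```

The last line comes out at roughly 13,000 times today's total energy use, which is the arithmetic behind "burn the planet down."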

All Human Brains vs. All Intelligent Systems

In 2025, there are 8.2 billion humans. Multiply that by one brain’s estimated power (1 × 10¹⁸ FLOPS), and you get 8.2 × 10²⁷ FLOPS: the total power of biological intelligence on Earth. Now compare that to the combined total of all chip-based intelligent systems worldwide, about 3.2 × 10²¹ FLOPS, or roughly 2.5 million times smaller.

But here’s the twist: machine capacity is doubling every few months, and biology doesn’t stand a chance in a race like that. We’re not just approaching parity; we’re speeding toward a flip. And when that happens, everything changes.
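How long until the flip? A rough answer needs only the size of the gap and a doubling time. This Python sketch uses the article's 2025 figures and assumes, simplistically, that the human total stays fixed while machine capacity keeps doubling at a constant rate; the three doubling times are the growth rates discussed in this article.

```python
import math

# 2025 figures from the text above.
BRAIN_FLOPS = 1e18            # high-end estimate for one brain
HUMANS = 8.2e9                # world population
MACHINE_FLOPS_2025 = 3.2e21   # all chip-based intelligent systems

def years_to_parity(doubling_months: float) -> float:
    """Years until machines match all human brains combined.

    Simplifying assumptions: the human total stays fixed, and machine
    capacity doubles at a constant rate.
    """
    human_total = BRAIN_FLOPS * HUMANS              # 8.2e27 FLOPS
    gap = human_total / MACHINE_FLOPS_2025          # about 2.6 million
    doublings_needed = math.log2(gap)               # about 21.3
    return doublings_needed * doubling_months / 12

for months in (5, 7, 10):
    print(f"doubling every {months} months: parity in about {years_to_parity(months):.0f} years")
```

At a 7-month doubling, the gap closes in roughly 12 years, i.e., the late 2030s, in the same range as the 2040 date the article gives for AI rivaling the cognitive capacity of all humans.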

PART II

YOU CAN’T TRUST YOUR GUT WITH EXPONENTIAL GROWTH

It Doesn’t Just Get Big—It Gets Unimaginably Big

The human brain is terrible at picturing exponential growth. We're wired for straight lines and steady progress—not curves that explode skyward. But if you want to understand what’s happening with AI—and why the future is arriving faster than you think—you must understand how doubling works.

Let’s break it down.

If your money grows at 5% a year, it doubles in about 14 years. If it grows at 15%, it doubles in about 5 years, and in 15 years you’ll have roughly 8 times your starting amount.
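For readers who want to check the money example, this Python sketch computes the exact doubling time, the familiar rule-of-72 shortcut, and the 15-year multiple. It assumes annual compounding with no deposits or withdrawals.

```python
import math

def doubling_time_years(annual_rate: float) -> float:
    """Exact doubling time under compound growth (0.05 means 5% a year)."""
    return math.log(2) / math.log(1 + annual_rate)

def rule_of_72(annual_rate: float) -> float:
    """Mental shortcut: 72 divided by the percentage rate."""
    return 72 / (annual_rate * 100)

def growth_multiple(annual_rate: float, years: float) -> float:
    """Multiple of the starting amount after `years` of compounding."""
    return (1 + annual_rate) ** years

for rate in (0.05, 0.15):
    print(f"{rate:.0%}: doubles in {doubling_time_years(rate):.1f} years "
          f"(rule of 72: {rule_of_72(rate):.1f}), "
          f"x{growth_multiple(rate, 15):.1f} after 15 years")
```

At 5% the money doubles in about 14.2 years; at 15% it doubles in about 5 years and grows a little over 8-fold in 15 years.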

Now imagine a doubling that keeps happening—not once or twice, but dozens of times. That’s when growth goes from impressive to incomprehensible.

The Rice-on-a-Chessboard Thought Experiment

Picture a chessboard. It has 64 squares. You place 1 grain of rice on the first square. Then 2 on the next. Then 4. Then 8. You double the number on each square all the way to square 64.

Before you read on, take a moment to guess how much rice will be on the board at the end. A bag? A silo? A mountain?

By the time you fill the 64th square, the board holds over 18 quintillion grains of rice. At roughly 25 mg per grain, that’s about 460 billion metric tons, hundreds of times the world’s entire annual rice harvest. And it gets crazier: if you added just one more square, the 65th, it alone would hold more rice than all 64 previous squares combined.

This is the power of exponential growth. It starts slowly and then it detonates.
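The chessboard arithmetic is easy to verify because Python integers never overflow. The 25 mg weight per grain is an assumed typical value, not a figure from the text; the tonnage scales in proportion to it.

```python
GRAMS_PER_GRAIN = 0.025   # assumption: about 25 mg per grain of rice

# Square k (1-based) holds 2**(k - 1) grains.
grains_on_square = [2 ** (k - 1) for k in range(1, 65)]
total_grains = sum(grains_on_square)            # equals 2**64 - 1

tonnes = total_grains * GRAMS_PER_GRAIN / 1e6   # grams -> metric tons

print(f"{total_grains:,} grains")               # 18,446,744,073,709,551,615 grains
print(f"about {tonnes:.2e} metric tons")        # about 4.61e+11 metric tons

# A 65th square alone (2**64 grains) holds one grain more than all 64 before it.
print(2 ** 64 - total_grains)                   # 1
```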

Now - Apply That to Intelligence

From 2010 to mid-2024, the compute used to train cutting-edge AI models has doubled every 5 months. If that doubling continues, we’ll hit 64 doublings—chessboard territory—by 2052.

That means the growth in intelligence isn’t just fast. It’s devastatingly fast. And we’re already past the point where intuition can keep up.
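The 2052 date follows from simply counting doublings. A minimal Python sketch, assuming a mid-2024 start and a constant 5-month doubling time:

```python
def year_after_doublings(start_year: float, doublings: int, doubling_months: float) -> float:
    """Calendar year reached after a number of equal-length doublings."""
    return start_year + doublings * doubling_months / 12

# Mid-2024 start, 5-month doublings, one doubling per chessboard square.
arrival = year_after_doublings(2024.5, 64, 5)
growth = 2 ** 64   # total multiplication factor over 64 doublings

print(f"64 doublings reached around {arrival:.1f}")   # 2051.2
print(f"compute multiplied by {growth:.2e}")          # 1.84e+19
```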

Still think you know what’s coming?

AI Is No Longer Following Moore’s Law—It’s Blowing Past It

For decades, we’ve used Moore’s Law to predict the future of computing—chips doubling in performance every 2 years. It was fast. It felt like a revolution. But today’s AI isn’t following that script. It’s accelerating at a pace Moore never imagined.

Since 2019, the global stock of AI compute has been doubling every 10 months—an annual growth rate of more than 2×. That’s the backbone behind the rapid rise of models like GPT-4, Claude, and Gemini. And that’s just the average.

The most powerful AI training runs, the so-called “frontier models,” have been doubling every 5 months since 2010. That means their training compute has been growing roughly 5× every single year.

Here’s how the growth compares:

| Curve | Doubling Time |
|---|---|
| Moore’s Law (Classic chip speed) | 24 months |
| Hardware Compute Growth (AI-wide) | 10 months |
| Frontier Model Training Compute | 5 months |
| AI Problem-Solving Capability (METR test) | 7 months |
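Doubling times are easier to compare as yearly growth multiples. This Python sketch converts each row of the table with the identity factor = 2^(12 / doubling months), assuming the doubling time stays constant over the year.

```python
# Doubling times from the table above, in months.
DOUBLING_MONTHS = {
    "Moore's Law": 24,
    "Hardware compute (AI-wide)": 10,
    "Frontier training compute": 5,
    "METR problem-solving": 7,
}

def annual_factor(doubling_months: float) -> float:
    """Growth multiple per year implied by a constant doubling time."""
    return 2 ** (12 / doubling_months)

for name, months in DOUBLING_MONTHS.items():
    print(f"{name.ljust(28)} {annual_factor(months):.2f}x per year")
```

A 5-month doubling compounds to a little over 5× per year, while Moore's classic pace is about 1.4×.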

And if the doubling continues, we’ll soon be living in a world where machines can solve problems we can’t even imagine—at a speed no human can keep up with.

The question now isn’t if AI will surpass us. It’s what happens after it does.

The End of the Line? Even the Universe Has Limits

So far, we’ve talked about exponential growth as if it could go on forever. But physics says otherwise. Even the universe—that vast, black sea of stars and silence—has a maximum capacity for intelligence. The laws of quantum mechanics, relativity, and thermodynamics place a hard ceiling on how much computation is physically possible inside the observable cosmos. This isn’t science fiction. It’s math from the deepest layers of theoretical physics.

According to MIT physicist Seth Lloyd, and earlier work by Margolus, Levitin, and Bremermann, here’s what the entire universe looks like as a giant computer:

Processing Speed: ~10¹⁰³ operations per second (yes, that’s a 1 followed by 103 zeroes)

Memory (RAM): ~10¹²² bits

Total Computation Since the Big Bang: ~10¹²¹ FLOPs

That's it. According to our current scientific understanding, there is a limit to how much computation can be done, and therefore to how much intelligence can exist. The cosmic hard drive is finite. No clever code, no superintelligence, no alien civilization can go beyond it.

Let’s do a thought experiment. Today, in early 2025, the total computational power of all AI systems on Earth is around 5.5 × 10²¹ FLOPS. Now assume we keep doubling every 7 months, a pace that AI models are already demonstrating. How long until we hit the theoretical computational capacity of the universe?
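The thought experiment reduces to a single logarithm: the number of doublings is log2(target / start), and each doubling takes 7 months. This Python sketch treats the universe's ~10¹⁰³ operations per second and today's AI FLOPS as directly comparable, which is itself a simplifying assumption, and adds the slower 10-month hardware pace for comparison.

```python
import math

AI_FLOPS_2025 = 5.5e21       # all AI systems on Earth, early 2025 (from the text)
UNIVERSE_OPS_PER_S = 1e103   # processing-speed ceiling of the universe (from the text)

def years_to_reach(target: float, start: float, doubling_months: float) -> float:
    """Years of constant-rate doubling needed to grow from start to target."""
    doublings = math.log2(target / start)
    return doublings * doubling_months / 12

doublings = math.log2(UNIVERSE_OPS_PER_S / AI_FLOPS_2025)
print(f"doublings needed: about {doublings:.0f}")
print(f"7-month doubling: about {years_to_reach(UNIVERSE_OPS_PER_S, AI_FLOPS_2025, 7):.0f} years")
print(f"10-month doubling: about {years_to_reach(UNIVERSE_OPS_PER_S, AI_FLOPS_2025, 10):.0f} years")
```

Under these assumptions, about 270 doublings are needed: roughly 157 years at a 7-month pace and about 225 years at a 10-month pace.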

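Simple doubling arithmetic gives the answer: going from 5.5 × 10²¹ to 10¹⁰³ operations per second takes about 270 doublings, or roughly 160 years at 7 months apiece. We’re already climbing that mountain, fast. But long before that limit is tested, practical physical limits kick in.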

PART III

PRACTICAL LIMITS WELL BEFORE THE UNIVERSE’S CEILING

AI’s rapid march toward greater intelligence isn’t constrained by theory—it’s constrained by power. At today’s pace, the collision course is clear:

Energy use: AI’s footprint is about 10 GW in 2025. If compute keeps doubling every 10 months, then even with continued efficiency gains, AI would consume all of today’s global electric grid output in ~15 years and match all human energy use in ~20.

Efficiency gains: Koomey’s Law doubles operations per watt every 1.65 years, but in fewer than 100 years it runs into the Landauer limit, beyond which no conventional (irreversible) chip can get more efficient. Before that, AI power use would surpass the Sun’s luminosity (4 × 10²⁶ W), then the combined output of every star in the observable universe.
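The article doesn't show the arithmetic behind these two items. One possible reconstruction, sketched in Python: power draw grows with compute but is offset by Koomey's-law efficiency gains, so it doubles every 1 / (1/10 − 1/19.8) ≈ 20 months. With that assumption, the grid and all-energy milestones land near 14 and 18 years, close to the ~15 and ~20 quoted; without efficiency gains they arrive in about 7 and 9 years. The grid and primary-energy totals, the 10⁻¹² J per operation, and the one-bit-erasure-per-operation comparison are assumed round numbers, not figures from the text.

```python
import math

# Figures from the text.
AI_POWER_2025_W = 10e9          # about 10 GW AI footprint in 2025
COMPUTE_DOUBLING_MONTHS = 10    # AI compute doubles every 10 months
KOOMEY_DOUBLING_YEARS = 1.65    # operations per watt double every 1.65 years

# Assumed round figures (not from the text).
GLOBAL_GRID_W = 3.4e12          # about 30,000 TWh/yr of electricity, as average power
GLOBAL_PRIMARY_W = 19e12        # all human energy use, as average power
J_PER_OP_TODAY = 1e-12          # hypothetical energy per operation today
LANDAUER_J_PER_BIT = 1.380649e-23 * 300 * math.log(2)  # kT ln 2 at 300 K

def power_doubling_months(with_efficiency: bool) -> float:
    """Doubling time of AI power draw: compute growth minus efficiency growth."""
    if not with_efficiency:
        return COMPUTE_DOUBLING_MONTHS
    efficiency_months = KOOMEY_DOUBLING_YEARS * 12
    return 1 / (1 / COMPUTE_DOUBLING_MONTHS - 1 / efficiency_months)

def years_until_power(target_w: float, with_efficiency: bool) -> float:
    """Years until AI power draw reaches target_w at a constant doubling rate."""
    doublings = math.log2(target_w / AI_POWER_2025_W)
    return doublings * power_doubling_months(with_efficiency) / 12

def years_until_landauer(bits_erased_per_op: float = 1) -> float:
    """Years of Koomey's Law left before J/op reaches the Landauer floor."""
    floor = LANDAUER_J_PER_BIT * bits_erased_per_op
    return math.log2(J_PER_OP_TODAY / floor) * KOOMEY_DOUBLING_YEARS

for label, target in (("global grid", GLOBAL_GRID_W), ("all energy", GLOBAL_PRIMARY_W)):
    print(f"{label}: {years_until_power(target, True):.0f} years with efficiency gains, "
          f"{years_until_power(target, False):.0f} without")
print(f"Landauer floor reached in about {years_until_landauer():.0f} years")
```

Counting more bit erasures per operation, as real arithmetic requires, moves the Landauer floor closer; at 1,000 erasures per operation it arrives in roughly 30 years instead of about 47.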

The truth is we’ll hit real-world limits far sooner—chip supply, cooling, grid capacity, capital cost, policy restrictions, and the slow fade of efficiency gains as we approach voltage and memory floors.

The US Power Gap

If AI’s growth rate continues, even after factoring in better inference efficiency, U.S. electricity demand from AI and data centers will overshoot planned capacity within a decade. Keep in mind that adding significant grid resources has a 10-year planning horizon.

And AI won’t be the only competitor for those electrons: EVs, electric heating, and advanced automation will all be growing significantly and drawing from the same grid.

Heat dissipation is the other silent killer. Every 1 GW of electricity from a fossil or nuclear plant throws off up to about 2 GW of waste heat, and nuclear plants, with their lower thermal efficiency, sit at the high end. That heat must go somewhere (into air, water, or land), and near dense populations it will drive up cooling-water demand, thermal pollution, and lethal heat zones long before CO₂-driven climate change reaches its peak.
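The heat figures come from one line of energy bookkeeping: a thermal plant with efficiency η takes in 1/η units of heat per unit of electricity and rejects the rest. This Python sketch uses assumed typical efficiencies (illustrative values, not figures from the text) for three plant types. By the same formula, efficiencies of roughly 35 to 45 percent give about 1.2 to 1.9 GW of heat per GW, the range the article quotes for fusion.

```python
def waste_heat_gw(electric_gw: float, efficiency: float) -> float:
    """Heat rejected by a thermal power plant.

    Heat input is electric_gw / efficiency; whatever is not converted
    to electricity is rejected to the environment.
    """
    if not 0 < efficiency < 1:
        raise ValueError("efficiency must be between 0 and 1")
    return electric_gw / efficiency - electric_gw

# Assumed typical thermal efficiencies (illustrative, not from the text).
PLANTS = {
    "nuclear (light-water)": 0.33,
    "coal": 0.37,
    "gas combined-cycle": 0.55,
}

for name, eff in PLANTS.items():
    print(f"{name.ljust(22)} {waste_heat_gw(1.0, eff):.2f} GW of heat per GW of electricity")
```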

Policymakers and engineers already treat energy as AI’s dominant choke point. Without breakthroughs, exponential AI growth will flatten into an S-curve long before we approach physics’ ceiling.

Technologies That Could Extend AI’s Exponential Growth

It is likely that money and geopolitics will sustain current AI growth rates for another 10–15 years. AI power will rival today’s global grid by the mid-2030s, lifting the eventual peak of the S-curve.

In the short term, we already see gigantic data centers going up in rural areas with dedicated off-grid power, a trend that will accelerate. But after mid-century, physics, land use, and infrastructure drag assert themselves, bending even the most lavishly funded exponential into an S-curve.

To keep feeding the AI curve, we’ll need step-change improvements beyond the incremental gains in grid planners’ projections. Technologies now in development that could address this ravenous need for energy include:

Algorithmic Breakthroughs – Not just better training pipelines, but radical new approaches. Example: the cost of GPT-3.5-level inference dropped about 280× in under two years, an average of well over 10× per year. These types of breakthroughs are step functions that are impossible to project.

Reversible/Adiabatic Logic – Chip designs that recycle most of the energy normally lost as heat, edging closer to the Landauer limit. Quantum computing offers inherent reversibility.

Cryogenic Computing & Off-Planet Data Centers – Space offers near-absolute zero temperatures, eliminating most resistive losses and easing cooling constraints.

Neuromorphic Chips – Brain-inspired processors that can be up to 10,000× more efficient than today’s von Neumann architectures.

Fusion Power – Near-limitless energy, but still dumping 1.2–1.9 GW of waste heat per GW generated, with massive water requirements.
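Converting a multi-year improvement into a yearly rate is a useful sanity check on figures like the 280× inference-cost drop in the algorithmic-breakthroughs item. This Python sketch assumes a steady, compounding decline, which real price cuts are not; the month spans are assumed inputs, since the text only says "under two years."

```python
def annualized_factor(total_factor: float, months: float) -> float:
    """Average per-year multiple implied by total_factor achieved over `months`."""
    return total_factor ** (12 / months)

# "Under two years" is approximate, so try a few assumed spans.
for months in (18, 21, 24):
    print(f"280x over {months} months = {annualized_factor(280, months):.1f}x per year")
```

Over a span close to two years, that works out to roughly 17 to 25× per year.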

Why Artificial Intelligence Will Continue to Grow Aggressively

By 2040, AI could rival the combined cognitive capacity of all humans, and without our biological bottlenecks, it is hardly a fair fight. AI processes pure cognition, with instantaneous access to the sum of human knowledge, operating far faster than any brain.

Those who control AI will benefit from its economic yield. It is a race, and the winner will be the group that can feed AI the energy it turns into economic value.

Chips, algorithms, and IP can be copied or stolen. Energy is bound to geography. Reality tilts the long game toward nations with vast land, cooling capacity, and power generation potential. Space will be the ultimate frontier, moving the heat off a warming planet.

History is unkind to less capable competitors. Whether AI merges with humanity (as Kurzweil and Musk envision) or supplants it, intelligence tends to dominate. In nature, humans reduced wild mammal biomass by 85%; today, 96% of mammal biomass is us and our livestock.

The next few years will look deceptively manageable, but major disruptions are rarely smooth curves. A dot-com-style bust is likely: a period when intelligent capacity outstrips its profitable uses.

The curve steepens quickly. More computational intelligence than humanity has ever generated will come online in our lifetimes. The question is whether our institutions can adapt before physics forces a slowdown… or before a winner in the AI race decides the rest of us no longer matter.

"The challenge presented by the prospect of superintelligence, and how we might best respond is quite possibly the most important and most daunting challenge humanity has ever faced. And-whether we succeed or fail-it is probably the last challenge we will ever face."

— Nick Bostrom

One of the most influential thinkers on existential risks and the future of humanity

REFERENCES:

The Singularity Is Nearer: When We Merge with AI – Ray Kurzweil

A Brief History of Intelligence – Max Bennett (Mariner Books)

Ajeya Cotra published the most widely discussed study on AI matching the human brain. Cotra estimated a 50% probability that such “transformative AI” will be developed by the year 2040.

Energy and AI – IEA (2025). Sets the benchmark projection that data-centre electricity could double to ~945 TWh by 2030. PDF and web summary on the IEA site.

Greening Digital Companies 2024 – ITU / WBA. Tracks the energy and Scope-3 emissions of 200 tech firms. Free PDF on the ITU publications hub.

IRDS 2024 Edition – IEEE. Chapter tables show joules-per-operation targets, 3-D stacking timelines, and post-CMOS options out to 2040. PDFs for each chapter are posted on irds.ieee.org.

AI and Compute Issue Briefs – CSET. Short, highly readable updates on compute races and national-security stakes. All briefs free at cset.georgetown.edu.

The Cost of Compute – McKinsey (2024). Puts a $6.7 trillion price tag on data-centre build-out through 2030. Public article plus full report PDF on mckinsey.com.

Global Data Center Survey 2024 – Uptime Institute. Real-world PUE, AI workload share, staffing, and power-outage stats. Executive summary PDF free after e-mail signup.

Energy-Efficiency Scaling for Two Decades (EES2 draft roadmap) – U.S. DOE. Lays out the U.S. target to cut joules per bit by 1,000× via reversible and cryo-CMOS. Posted as an open draft on energy.gov (search “EES2 roadmap”).

Business Insider (Apr 2025) – Coverage of Epoch AI’s “city-sized power” forecast, good for a quick headline grasp of the stakes. Search BI for the April 2025 article quoting Epoch AI.

Chip War – Chris Miller. Why fabs and geopolitics drive the hardware race.

Life 3.0 – Max Tegmark. Future-scenario chapters on compute limits.

Powering the Future – Robert B. Laughlin. Energy-transition primer that frames how many clean terawatts the world can add.
